Thank you for your feedback, SylenThunder, and I appreciate your continued engagement in this discussion.

I want to clarify that I’d rather not focus on the specific hardware I’m using, which I’m not ready to disclose at this time. My intention is to keep the discussion open and centered on the performance differences between version 1.0 and the previous alpha versions, rather than on the specifics of my setup.

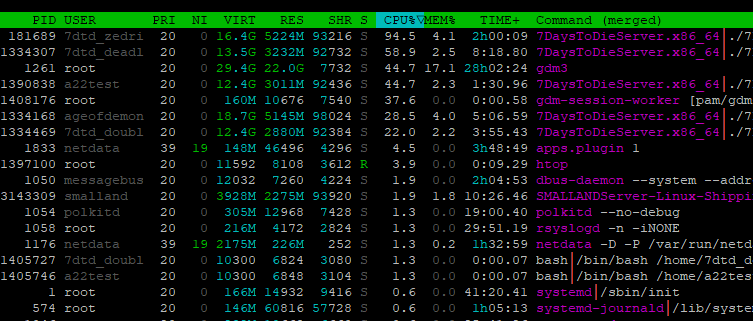

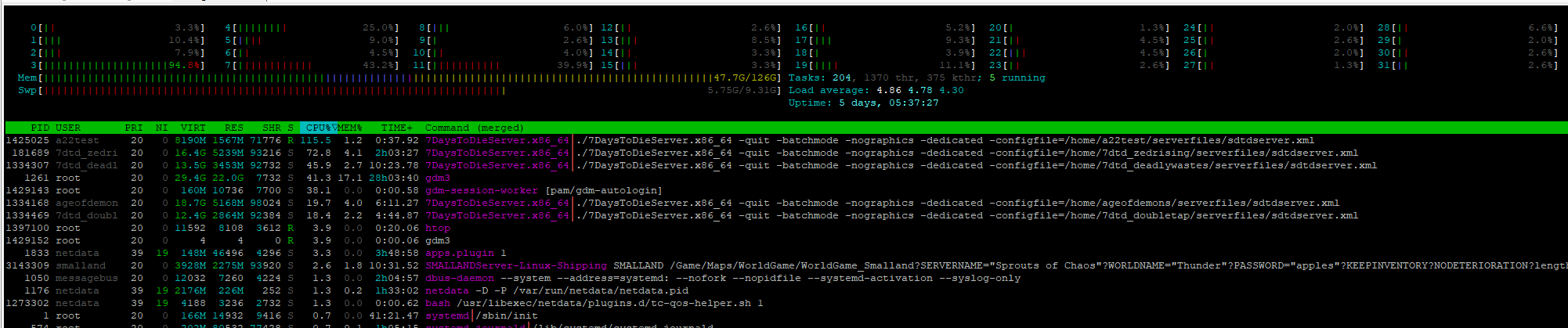

The key issue here is that I’m running various versions of 7DtD on the exact same hardware, yet the performance difference is vast. For instance, where a21.2 averaged around 16% CPU usage at idle, version 1.0 is now averaging 77% under similar conditions. This is significant, especially considering that I’m running about 8-10 maps simultaneously on this machine, depending on the week of the month. The cumulative CPU usage is becoming overwhelming, which wasn’t an issue with the alpha versions.
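To put rough numbers on that, here is a minimal Python sketch of the arithmetic. It assumes the 16% and 77% figures are per-instance averages in whatever unit the monitoring tool reports, and that the load simply adds up across instances, which is a simplification. The function names are only illustrative.

```python
def cumulative_cpu(per_instance_pct, instances):
    """Total CPU percentage if every instance averages per_instance_pct."""
    return per_instance_pct * instances


def compare(old_pct, new_pct, instance_counts):
    """Return (instances, old_total, new_total) for each instance count."""
    rows = []
    for n in instance_counts:
        rows.append((n, cumulative_cpu(old_pct, n), cumulative_cpu(new_pct, n)))
    return rows


if __name__ == "__main__":
    # 16% (a21.2) and 77% (1.0) are the idle averages quoted above;
    # 8 and 10 are the low and high ends of the instance count.
    for n, old, new in compare(16, 77, (8, 10)):
        print(f"{n} instances: a21.2 ~{old}% total, 1.0 ~{new}% total "
              f"({new / old:.1f}x)")
```

Under those assumptions, 8 instances go from about 128% to about 616% in total, and 10 instances from about 160% to about 770%, which is roughly a 4.8x increase either way.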

To provide a bit more context, I’m running the 7DtD dedicated servers close to bare metal: on Linux with no VM involved, though I am using Docker. All of my instances run on the same machine, so the OS environment is identical for every instance regardless of 7DtD version. I’m genuinely trying to understand what might be causing this increase in CPU usage, whether it’s related to TFP using new CPU instructions or optimizations, or something else entirely. I’m not looking to point fingers but to have a constructive discussion to find a solution and restore stable performance.

Since you mentioned the importance of providing logs, could you please advise on which specific logs, or which parts of them, I should provide? I’m asking because I can’t see anything in the logs that directly relates to how the 7DtD servers are performing. They seem to contain mostly static information about what is loaded at startup and what changes during gameplay. I want to make sure I’m giving the most relevant data to help us better understand what might be happening.

Of course, the answer could simply be that nothing significant changed between the versions, and that no one else is experiencing what I am. If that’s the case, then this is likely a problem specific to my setup, and I’ll need to focus on resolving it on my end.

Thank you again for your input, and I’ll review the link you provided to see how your setup compares.