You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

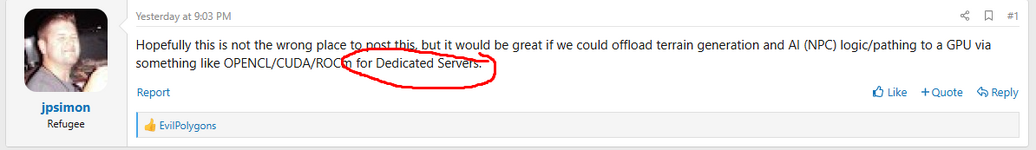

GPU Acceleration for AI & Terrain Generation on Dedicated Servers

- Thread starter jpsimon

EvilPolygons

Survivor

A dedicated server can get by with a simple graphics card, since the card is never really used. Even a Trident T9000i is sufficient for displaying logs on the screen (though you can't install it in a modern motherboard). Do you want to upgrade all dedicated servers?

I think you're missing the point. He's asking if particularly intensive tasks can be offloaded from the CPU to a GPU for faster and more efficient processing. The Tensor cores on an RTX card can be used for MUCH more than just AI-accelerated rendering. Physics calculations and advanced NPC behavior could both benefit greatly from leveraging Tensor core technology.

You didn't read carefully.

So I'll repeat: a dedicated server may not have a powerful video card. It's not a client.

EvilPolygons

Survivor

That's not the point. If the server had some sort of CUDA support, then processor-intensive operations *could* be offloaded onto an RTX card's Tensor cores, improving server performance. It's not about requiring a powerful video card; it's about supporting powerful video cards to improve performance.

If a person has an RTX card they want to throw into their server to improve performance, then it would be great if that sort of thing was supported. There's not much that Tensor cores can't accelerate, tbh. I've used my RTX card for everything from texture & height map generation, to physics simulations, to AI upscaling for old movies.

I'm not sure why you're so opposed to the idea of people using Tensor cores to improve server performance. Tensor cores basically improve everything.

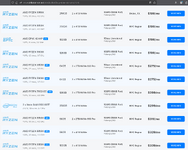

I think the point is that there are VERY few servers with even a decent video card. It's simply not necessary for a server. Go check any hosting company that rents out dedis, or even just game hosters: an expensive video card that basically does nothing isn't part of those in 99% of cases. So investing dev time to support those very few would be a waste of time.

It's cheaper to install a second processor.

jpsimon

Refugee

It may be cheaper to install a second processor; however, the latency penalty of going from one to the other would be tremendous.

@Fritzl - You are correct; however, that does not make it impossible to add one to a server that someone is running at home, or to find one to rent:

As @EvilPolygons stated, it's not about requiring GPUs in dedicated servers; it's about supporting them so that tasks a GPU is better at can be offloaded to it.

I believe GPU acceleration of game server tasks will become "standard operating procedure" in the future. Given how much even a beefy computer can still struggle with NPC generation and pathfinding during horde night, a GPU's massively parallel compute capability could help with this.

Horde nights don't struggle because the CPU is weak. They struggle because the algorithms aren't tested and optimized for big player counts.

Algorithms with quadratic or worse scaling can't be compensated for with hardware.

If you want it improved, the best approach is to gift the devs structured profiling data and pray. Or find a programmer to rewrite it; big servers are a tiny minority, not something that will generate money for the devs.
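To put rough numbers on the quadratic-scaling point: if the per-tick cost grows like n² in the number of entities n, then hardware that is k times faster only raises the entity count you can handle in the same time budget by about √k. Doubling the hardware speed buys roughly 41% more zombies. The Python sketch below is a hypothetical illustration, not the game's actual code: nothing in this thread says the game does pairwise proximity checks, and the function names `naive_pair_checks` and `grid_pair_checks` are made up. It compares checking every entity against every other one with bucketing entities into a uniform grid (a simple spatial hash), which is the kind of algorithmic rewrite that helps far more than extra hardware.

```python
import math
import random
from collections import defaultdict


def naive_pair_checks(positions, radius):
    """Compare every entity with every other one: n*(n-1)/2 distance checks.

    Returns (number_of_distance_checks, number_of_pairs_within_radius).
    """
    checks = close = 0
    n = len(positions)
    for i in range(n):
        for j in range(i + 1, n):
            checks += 1
            if math.dist(positions[i], positions[j]) <= radius:
                close += 1
    return checks, close


def grid_pair_checks(positions, radius):
    """Bucket entities into square cells of side `radius`, then compare each
    entity only with entities in its own cell and the 8 neighbouring cells.

    Any two points within `radius` of each other land in the same or adjacent
    cells, so this finds exactly the same pairs as the naive version.
    """
    cells = defaultdict(list)
    for idx, (x, y) in enumerate(positions):
        cells[(int(x // radius), int(y // radius))].append(idx)

    checks = close = 0
    for (cx, cy), members in cells.items():
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                # .get() avoids inserting empty cells while iterating.
                for j in cells.get((cx + dx, cy + dy), ()):
                    for i in members:
                        if i < j:  # count each unordered pair exactly once
                            checks += 1
                            if math.dist(positions[i], positions[j]) <= radius:
                                close += 1
    return checks, close


if __name__ == "__main__":
    random.seed(0)
    for n in (200, 400, 800):
        side = 10.0 * math.sqrt(n)  # keep entity density constant as n grows
        pts = [(random.uniform(0, side), random.uniform(0, side)) for _ in range(n)]
        naive = naive_pair_checks(pts, 5.0)
        grid = grid_pair_checks(pts, 5.0)
        assert naive[1] == grid[1]  # same answer, very different cost
        print(f"n={n}: naive checks={naive[0]}, grid checks={grid[0]}")
```

Both versions find the same close pairs. The naive check count roughly quadruples every time n doubles, while the grid version's work grows roughly in proportion to n as long as the entities stay spread out. If everything piles into one cell (say, a horde converging on one base), the grid degrades back toward quadratic, so it's a mitigation rather than magic, but no amount of faster hardware changes the n² curve by itself.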
